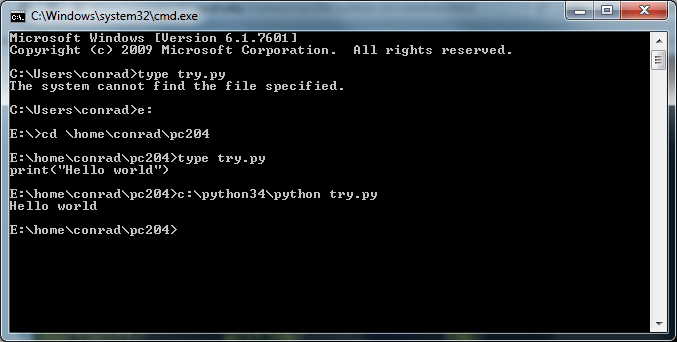

You open a connection to a database file named database.db, which is created when you run the Python file. Then you use the open() function to open the schema.sql file. Next you execute its contents using the executescript() method, which executes multiple SQL statements at once and creates the posts table. You create a Cursor object that allows you to process rows in a database. In this case, you'll use the cursor's execute() method to execute two INSERT SQL statements that add two blog posts to your posts table. Finally, you commit the changes and close the connection.

SQLite offers several kinds of functionality to the user. Exporting to CSV is one of them: the user can convert the database into a CSV file with a handful of commands. There are several ways to dump the database or convert it into a CSV file. The SQLite project provides a command-line program called sqlite3 (sqlite3.exe on Windows). With the sqlite3 tool, we can use SQL statements and various dot commands to convert the SQLite database to a CSV file.

In the code above, you first import the sqlite3 module to use it to connect to your database. Then you import the Flask class and the render_template() function from the flask package. Inside get_db_connection(), setting the connection's row_factory attribute to sqlite3.Row means that the database connection will return rows that behave like regular Python dictionaries. Lastly, the function returns the conn connection object you'll be using to access the database.

Since non-technical clients don't typically understand database concepts, new clients often give me their initial data in Excel spreadsheets. Let's first look at a simple method for importing data.
The easiest way to deal with incompatible data in any format is to load it in its original software and export it to a delimited text file. Most applications can export data to a text format and will let the user set the delimiters.
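As a rough illustration of exporting to a delimited text file with a configurable delimiter, here is a minimal Python sketch that uses only the standard library. The function name export_table, the pipe default, and the sample posts table are assumptions made for this example and are not taken from any of the tools discussed.

```python
import csv
import io
import sqlite3


def export_table(conn, table, out, delimiter="|"):
    """Write the header and every row of `table` to the file-like object `out`."""
    # Table names cannot be bound as SQL parameters, so quote the identifier.
    quoted = '"' + table.replace('"', '""') + '"'
    cursor = conn.execute(f"SELECT * FROM {quoted}")
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    # cursor.description holds one tuple per column; item 0 is the column name.
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor)


# Usage with an in-memory database and a hypothetical posts table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, content TEXT)")
conn.execute("INSERT INTO posts (title, content) VALUES (?, ?)", ("First Post", "Hello"))
buffer = io.StringIO()
export_table(conn, "posts", buffer)
print(buffer.getvalue())
conn.close()
```

Passing delimiter="," produces a conventional CSV file instead; the csv module quotes any field that contains the delimiter.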

We like to use the bar (i.e., |, a.k.a. pipe) to separate fields and the line-feed to separate records.

Gramps is a single-user database application and marks Tree database files as busy with a 'lock' when in use. As Gramps opens a tree, it drops a lock file in the tree's subfolder in the grampsdb folder of the User Directory. A second copy of Gramps refuses to let you open that Tree at the same time. Closing the tree in the first copy of Gramps deletes the lock file and makes the tree available to be opened in the second instance.

However, one feature missing from this kind of import at this time is a REPLACE flag, as is found in the LOAD DATA INFILE statement and in the mariadb-import/mysqlimport tool. So if a record already exists in the prospect_contact table, it will not be imported. Instead the import will kick out an error message and stop at that record, which can be a mess if one has imported several hundred rows and has several hundred more to go. One easy fix for this is to open prospects.sql in a text editor, search for the word INSERT, and replace it with REPLACE. The syntax of both of these statements is the same, fortunately. So one would only need to swap the keyword for new records to be inserted and for existing records to be replaced.

The next step is to upload the data text file to the client's web site by FTP. Binary mode may send binary hard-returns for row-endings.
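The search-and-replace on INSERT described above can also be scripted. The following is a minimal sketch under a stated assumption: each statement in the dump starts on its own line with INSERT INTO. The function name inserts_to_replaces and the sample rows are hypothetical.

```python
import re

# Match INSERT only where it begins a line (optionally indented) and is
# followed by INTO, so the word INSERT in the middle of row data is not touched.
_INSERT_AT_LINE_START = re.compile(
    r"^(\s*)INSERT(\s+INTO\b)", re.IGNORECASE | re.MULTILINE
)


def inserts_to_replaces(sql_text):
    """Return the dump text with leading INSERT INTO rewritten as REPLACE INTO."""
    return _INSERT_AT_LINE_START.sub(r"\1REPLACE\2", sql_text)


dump = (
    "INSERT INTO prospect_contact VALUES (1,'Please INSERT coin');\n"
    "INSERT INTO prospect_contact VALUES (2,'Second row');\n"
)
print(inserts_to_replaces(dump))
```

A plain editor search on the bare word INSERT would also change the text inside the first row's data, which is why the sketch anchors the match to the start of a line.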

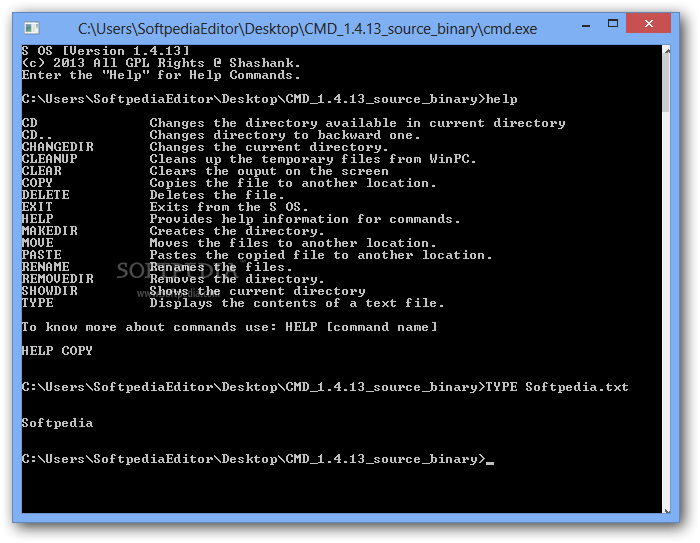

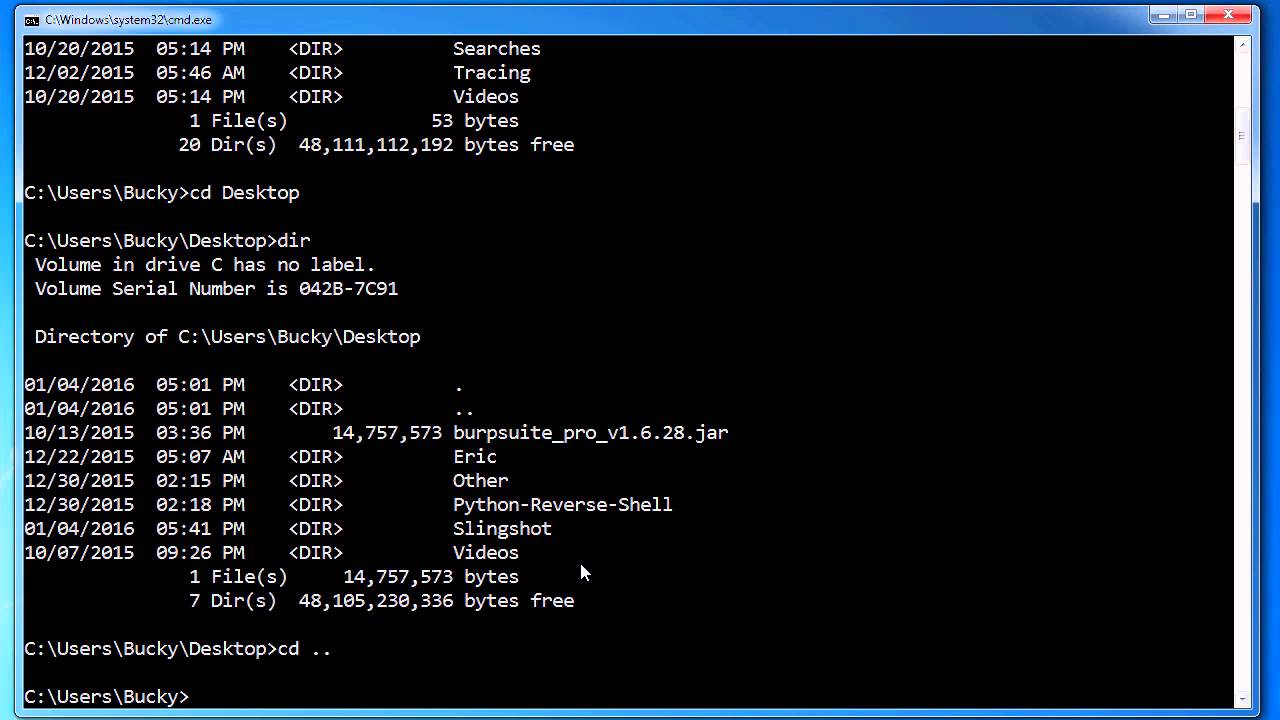

Also, it's a good security habit to upload data files to non-public directories. In such a situation, / is a virtual root containing logs and other files that are inaccessible to the public. We usually create a directory called tmp in the virtual root directory to hold data files temporarily for importing into MariaDB. Once that's done, all that is required is to log into MariaDB with the mysql client as an administrative user and run the proper SQL statement to import the data.

The ".databases" command shows a list of all databases open in the current connection. The first one is "main", the original database opened. The second is "temp", the database used for temporary tables. There may be additional databases listed for databases attached using the ATTACH statement. The first output column is the name the database is attached with, and the second result column is the filename of the external file. There may be a third result column which will be either "'r/o'" or "'r/w'" depending on whether the database file is read-only or read-write. And there may be a fourth result column showing the result of sqlite3_txn_state() for that database file.

If both the title and the content are provided, you open a database connection using the get_db_connection() function. You use the execute() method to execute an INSERT INTO SQL statement to add a new post to the posts table with the title and content the user submits as values. You commit the transaction and close the connection. Lastly, you redirect the user to the index page where they can see their new post below existing posts.

You then use the app.route() decorator to create a Flask view function called index().
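To make the database side of the post-creation flow concrete, here is a minimal sketch that leaves out Flask entirely and uses only the sqlite3 module. The schema is an assumption: the text names only the posts table and its title and content values, so the id column and the column types are illustrative. The helpers create_post and list_posts are hypothetical names, and unlike the flow described above they take an open connection instead of opening and closing one themselves.

```python
import sqlite3

# Assumed schema for illustration; only the table name and the title and
# content columns come from the text.
SCHEMA = """
DROP TABLE IF EXISTS posts;
CREATE TABLE posts (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT NOT NULL,
    content TEXT NOT NULL
);
"""


def get_db_connection(path="database.db"):
    """Open a connection whose rows can be indexed by column name."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row
    return conn


def create_post(conn, title, content):
    """Insert a post only if both fields are provided; return True on success."""
    if not title or not content:
        return False
    conn.execute("INSERT INTO posts (title, content) VALUES (?, ?)", (title, content))
    conn.commit()
    return True


def list_posts(conn):
    """Return every row of the posts table as a list of sqlite3.Row objects."""
    return conn.execute("SELECT * FROM posts").fetchall()


conn = get_db_connection(":memory:")
conn.executescript(SCHEMA)  # runs several SQL statements in one call
create_post(conn, "First Post", "Content for the first post")
create_post(conn, "", "Skipped because the title is empty")
for post in list_posts(conn):
    print(post["id"], post["title"])
conn.close()
```

In a Flask view, the same calls would sit between reading the submitted form values and redirecting to the index page.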

You use the get_db_connection() function to open a database connection. Then you execute an SQL query to select all entries from the posts table. You use the fetchall() method to fetch all the rows of the query result; this returns a list of the posts you inserted into the database in the previous step.

Format-specific options: these provide various compatibility settings depending on the chosen format of the backup file. For instance, you can change the column separator when exporting the database as a CSV file.

Gramps lets you open certain databases that have not been saved in Gramps' own file format from the command line; see Command line references. But you should be aware that if the XML or GEDCOM database is relatively large, you will encounter performance problems, and in the event of a crash your data can be corrupted. Hence, it is usually better to create a new Gramps family tree and import your XML/GEDCOM data into it.

Data in SQLite is stored in tables and columns, so you first need to create a table called posts with the necessary columns. You'll create a .sql file that contains SQL commands to create the posts table with a few columns. You'll then use this schema file to create the database.

Database backup is actually pretty simple: just copy the SQLite file to the backup system. But this method is often difficult, since users and applications may be working with the database concurrently and the data is stored in a binary format.
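One way to avoid copying a file that is in use is Python's Connection.backup() method (available since Python 3.7), which copies a consistent snapshot through SQLite's online backup API. The sketch below also shows Connection.iterdump(), which produces SQL text as a human-readable alternative to the binary file. The helper names and the sample table are assumptions made for this example.

```python
import sqlite3


def backup_database(source, target):
    """Copy a consistent snapshot of `source` into the `target` connection."""
    source.backup(target)


def dump_sql(conn):
    """Return the whole database as SQL text, one statement per line."""
    return "\n".join(conn.iterdump())


# A stand-in for the live database, with a hypothetical posts table.
live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
live.execute("INSERT INTO posts (title) VALUES ('First Post')")
live.commit()

# In practice the target would be a file path on the backup system.
snapshot = sqlite3.connect(":memory:")
backup_database(live, snapshot)
print(snapshot.execute("SELECT title FROM posts").fetchall())
print(dump_sql(live))
```

The SQL text from dump_sql can be written to a file and replayed later with executescript() to rebuild the database.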

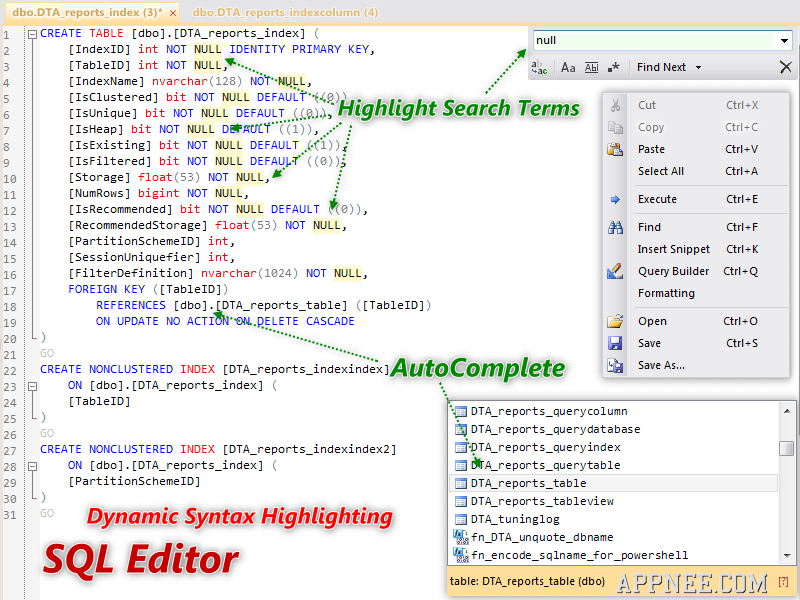

To take a point-in-time, human-readable snapshot while the database is running or in use, we can dump the database content to a file format of our choice.

SQL Server is very popular among relational databases because of its versatility in exporting data in Excel/CSV/JSON formats. This feature helps with the portability of data across multiple databases. In this article, let us see how to import and export SQL Server data to a CSV file. Azure Data Studio is a very useful tool for export options, and the best part is that it can run on Windows, Linux, and Mac operating systems.

dbForge IDE for MySQL delivers tools to help import and export data from/to tables and views of your MySQL databases. Supported formats include text, MS Excel, XML, CSV, DBF, and many more.

A direct path load does not compete with other users for database resources. Therefore, a direct path load can usually load data faster than a conventional path load. However, there are several restrictions on direct path loads that may require you to use a conventional path load. For example, direct path load cannot be used on clustered tables or on tables for which there are transactions pending.

The ".expert" command accepts the following options:

| Option | Purpose |
| --- | --- |
| --verbose | If present, output a more verbose report for each query analyzed. |
| --sample PERCENT | This parameter defaults to 0, causing the ".expert" command to recommend indexes based on the query and database schema alone. This is similar to the way in which the SQLite query planner selects indexes for queries if the user has not run the ANALYZE command on the database to generate data distribution statistics. With a non-zero argument, the command instead bases its statistics on a PERCENT sample of the rows in each table. For databases with unusual data distributions, this may lead to better index recommendations, particularly if the application intends to run ANALYZE. |

In addition to reading and writing SQLite database files, the sqlite3 program will also read and write ZIP archives. So you can open JAR, DOCX, and ODP files and any other file format that is really a ZIP archive and SQLite will read it for you.

mariadb-import/mysqlimport takes the table name from the name of the text file, so this requires that prospects.txt be renamed to prospect_contact.txt. If the file is not renamed, then MariaDB would create a new table called prospects and the --replace option would be pointless. After the file name, incidentally, one could list additional text files, separated by a space, to process using mariadb-import/mysqlimport. We've added the --verbose directive so as to be able to see what's going on. One probably would leave this out in an automated script. By the way, --low-priority and --ignore-lines are also available.

When a MariaDB developer first creates a MariaDB database for a client, the client has often already accumulated data in other, simpler applications. Being able to convert data easily to MariaDB is essential. In the previous two articles of this MariaDB series, we explored how to set up a database and how to query one. In this third installment, we'll introduce some methods and tools for bulk importing of data into MariaDB. Additionally, for intermediate developers, there are many nuances to consider for a clean import, which is especially important for automating regularly scheduled imports.
There are also constraints to deal with that may be imposed on a developer when using a web hosting company.

Having now played a few test games, it's time to look at the data. Arctype aims to make querying, analyzing, and visualizing data easier, so before we continue, we need to learn about importing and exporting data from SQLite. Data can be exported and imported at either the table level or the database level.

The table level is usually used to export to other databases or applications, and the database level is usually used for backup.

XML files exported by phpMyAdmin (version 3.3.0 or later) can now be imported. Structures (databases, tables, views, triggers, etc.) and/or data will be created depending on the contents of the file.

When .import is run, its treatment of the first input row depends upon whether or not the target table already exists. If it does not exist, the table is automatically created and the content of the first input row is used to set the name of all the columns in the table. In this case, the table data content is taken from the second and subsequent input rows. If the target table already exists, every row of the input, including the first, is taken to be actual data content. If the input file contains an initial row of column labels, you can make the .import command skip that initial row using the "--skip 1" option.

The ".fullschema" dot-command works like the ".schema" command in that it displays the entire database schema. But ".fullschema" also includes dumps of the statistics tables "sqlite_stat1", "sqlite_stat3", and "sqlite_stat4", if they exist. The ".fullschema" command normally provides all of the information needed to exactly recreate a query plan for a specific query.

Most of the time, sqlite3 just reads lines of input and passes them on to the SQLite library for execution. But input lines that begin with a dot (".") are intercepted and interpreted by the sqlite3 program itself. These "dot commands" are typically used to change the output format of queries, or to execute certain prepackaged query statements. There were originally just a few dot commands, but over the years many new features have accumulated so that today there are over 60.

Many custom accounting applications use the open source SQLite engine to manage data.
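The header-row rule that .import follows, as described earlier in this section, can be mimicked in Python. This is a sketch of the rule only, not the sqlite3 program's actual implementation: the function names, the skip parameter, and the choice to declare every new column as TEXT are assumptions made for this example.

```python
import csv
import io
import sqlite3


def quote_ident(name):
    """Quote a table or column name for safe use inside an SQL statement."""
    return '"' + name.replace('"', '""') + '"'


def table_exists(conn, table):
    row = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    return row is not None


def import_csv(conn, lines, table, skip=0):
    """Load CSV rows into `table`; return the number of data rows inserted."""
    rows = list(csv.reader(lines))[skip:]
    if not rows:
        return 0
    if not table_exists(conn, table):
        # New table: the first row names the columns, the rest is data.
        header, rows = rows[0], rows[1:]
        columns = ", ".join(f"{quote_ident(name)} TEXT" for name in header)
        conn.execute(f"CREATE TABLE {quote_ident(table)} ({columns})")
        width = len(header)
    else:
        # Existing table: every remaining row, including the first, is data.
        width = len(rows[0])
    placeholders = ", ".join("?" * width)
    conn.executemany(
        f"INSERT INTO {quote_ident(table)} VALUES ({placeholders})", rows
    )
    conn.commit()
    return len(rows)


data = "title,content\nFirst Post,Hello\nSecond Post,World\n"
conn = sqlite3.connect(":memory:")
import_csv(conn, io.StringIO(data), "posts")          # creates the table from the header
import_csv(conn, io.StringIO(data), "posts", skip=1)  # table exists, so skip the header
print(conn.execute("SELECT COUNT(*) FROM posts").fetchone()[0])  # prints 4
```

Leaving out skip=1 on the second call would insert the header row as if it were a post, which is the situation the "--skip 1" option exists to prevent.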

If your business accounting program connects to an SQLite database, you may want to export data from the application for analysis and use in Microsoft Excel.

All the names of your Family Trees appear in the list. If a Family Tree is open, an icon will appear next to the name in the status column. The Database Type as well as an indication of the date and time your family tree was 'Last accessed' is shown.

The process to export data from a table to a CSV file in SQL Server can be complicated for some users. Here, we have explained two different approaches to do this. You can go for either of the methods explained above as per your needs and requirements. However, the manual method does not work in the case of a corrupt database. The software that we have suggested above is an advanced solution that provides a safe environment to repair and export data from MDF files easily.

Are you looking for a reliable solution to export data from a table to a CSV file in SQL Server 2019, 2017, 2016, 2014, and below? We are here to help you find simple and efficient ways to export the data. So go through the whole post and resolve your issue efficiently. Before that, let us look at a user query about the same problem.

You can also perform exports and imports over a network.

In a network export, the data from the source database instance is written to a dump file set on the connected database instance. In a network import, a target database is loaded directly from a source database with no intervening dump files. This allows export and import operations to run concurrently, minimizing total elapsed time.

Thus, changing the output mode in one connection will change it in all of them. On the other hand, some dot-commands such as .open only affect the current connection.

The --deserialize option causes the entire content of the on-disk file to be read into memory and then opened as an in-memory database using the sqlite3_deserialize() interface. This will, of course, require a lot of memory if you have a large database. Also, any changes you make to the database will not be saved back to disk unless you explicitly save them using the ".save" or ".backup" commands.

Besides using the dot-commands, you can use the options of the sqlite3 tool to export data from the SQLite database to a CSV file.

The .schema command is used to see database schema information. It returns the CREATE command that was used to create the tables. If you created an index on any columns, then this will also show up.

You may notice that you have not imported all of the field columns in the CSV file. With your statements, you can choose which properties are needed on a node, which can be left out, and which might need to be imported to another node type or relationship. You might also notice that you used the MERGE keyword instead of CREATE. Though we feel fairly confident there are no duplicates in our CSV files, we can use MERGE as good practice for ensuring unique entities in our database.

Exporting a database as a file is a simple way to back up your entire database within a web site.
With the phpMyAdmin export database feature, you get to do it quickly and effortlessly.
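As a Python counterpart to the .schema command mentioned earlier in this section, the stored CREATE statements can be read from SQLite's sqlite_master table. This is a minimal sketch; the function name schema_statements and the sample table and index are assumptions made for this example.

```python
import sqlite3


def schema_statements(conn):
    """Return the stored CREATE statements for tables, indexes, and so on."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE sql IS NOT NULL"
    ).fetchall()
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.execute("CREATE INDEX idx_posts_title ON posts (title)")
for statement in schema_statements(conn):
    print(statement + ";")
```

The WHERE clause drops automatically created internal indexes, which have no stored SQL text.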

Additionally, you have the option to convert the backup into various other file formats to suit your needs. In the next section, choose SQL from the dropdown menu as your preferred format for the backup file. SQL is the default format used to import and export MySQL databases, as various systems generally support it.

Depending on the file format selected, you may find a variety of format-specific export options to choose from, listed below the "Filters and privacy" section.

If you import data from another Gramps database or Gramps XML database, you will see the progress of the operation in the progress bar of Gramps' main window. When the import finishes, a feedback window shows the number of imported objects. If you want to merge basic genealogy data automatically, consider CSV Spreadsheet Export/Import.

Zotero runs on a SQLite database, which makes report generation and, thereby, conversion to a different format difficult for the lay user. To export from Zotero, we can open the SQLite database, submit a query, and then copy the results to Excel, Calc, or another spreadsheet program.

This describes a simple method of importing data from a SQLite database into SQL Server using a linked server. You can find other methods of course, so see the links below in "Next Steps" for more options.

Export data of each subfolder to a separate table in the same database: another option is to export the items in each of the subfolders to a separate table. This gives more flexibility in configuring the field options of the subfolders to be exported. The latter option comes in handy when you have user-defined fields available at the folder or the item level.

Flask is a lightweight Python web framework that provides useful tools and features for creating web applications in the Python language. It gives developers flexibility and is an accessible framework for new developers because you can build a web application quickly using only a single Python file. Flask is also extensible and doesn't force a particular directory structure or require complicated boilerplate code before getting started. Learning Flask will allow you to quickly create web applications in Python. You can take advantage of Python libraries to add advanced features to your web application, like storing your data in a database or validating web forms.